Part 2/3: Creating a Markdown Q&A ChatBot: Chunking Documentation Using LangChain and OpenAI; then creating Embeddings

In part one of this series, we downloaded the TemporalIO Java documentation and transformed it into markdown files. If you haven't checked that out, go see this post. In this part, we will focus on chunking the documentation using LangChain and OpenAI and then we will create embeddings to store in our Pinecone index. If you're only interested in the full code, check out my GitHub repo and give it a star!

Let's get this show on the road...

2a. Chunking Documentation Using LangChain and OpenAI

In this section, we will chunk the documentation using LangChain's MarkdownHeaderTextSplitter. Because the documentation has been converted to markdown files, we can choose where the chunk boundaries fall. I decided to split on headers.

Step A: Importing Necessary libraries

from langchain.text_splitter import MarkdownHeaderTextSplitter

import os

We import the MarkdownHeaderTextSplitter class from the langchain.text_splitter module. This class splits a markdown document based on its headers.

The os module is imported to traverse directories and handle files.

Step B: Choosing what to split on

I chose to split the documents on # Header 1 and ## Header 2:

headers_to_split_on = [
    ("#", "Header 1"),
    ("##", "Header 2"),
]
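To make the effect of headers_to_split_on concrete, here is a minimal pure-Python sketch of header-based splitting. It is a simplified stand-in, not LangChain's implementation: the function name split_by_headers and the dictionary layout of each chunk are my own assumptions, and it ignores edge cases such as # characters inside code blocks.

```python
def split_by_headers(markdown_text, headers_to_split_on):
    """Simplified stand-in for header-based splitting (not LangChain's code)."""
    # Check longer markers first so "##" is not mistaken for "#".
    markers = sorted(headers_to_split_on, key=lambda pair: len(pair[0]), reverse=True)
    chunks = []
    metadata = {}
    lines = []

    def flush():
        # Close the current chunk, keeping a copy of the active headers.
        content = "\n".join(lines).strip()
        if content:
            chunks.append({"content": content, "metadata": dict(metadata)})
        lines.clear()

    for line in markdown_text.splitlines():
        for marker, name in markers:
            if line.startswith(marker + " "):
                flush()
                # A new header resets any deeper header levels.
                for other_marker, other_name in headers_to_split_on:
                    if len(other_marker) > len(marker):
                        metadata.pop(other_name, None)
                metadata[name] = line[len(marker):].strip()
                break
        else:
            # Not a header we split on, so it belongs to the current chunk.
            lines.append(line)
    flush()
    return chunks


headers_to_split_on = [("#", "Header 1"), ("##", "Header 2")]
sample = "# Intro\nHello\n## Setup\nInstall it\n# Usage\nRun it"
chunks = split_by_headers(sample, headers_to_split_on)
for chunk in chunks:
    print(chunk["metadata"], "->", chunk["content"])
```

Each chunk carries the headers it sits under as metadata, which is what lets a retrieved chunk be traced back to its section later on.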

Step C: Get all the Markdown files

def get_markdown_files():
    markdown_files = []
    for root, dirs, files in os.walk("text"):
        for file in files:
            if file.endswith(".md"):
                markdown_files.append(os.path.join(root, file))
    return markdown_files

We initialize an empty list, markdown_files, to store the paths of all markdown files.

Using os.walk("text"), we traverse the "text" directory.

For every file we encounter, if its extension is ".md" (indicating it's a markdown file), we add its full path to the markdown_files list.

The function returns the populated markdown_files list.
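If you prefer pathlib, the same traversal can be sketched in a couple of lines. This is an alternative under my own assumptions, not the code from the repo: the root parameter is added for illustration, and the result is sorted so the order is stable between runs.

```python
from pathlib import Path


def get_markdown_files(root="text"):
    """Return the paths of all .md files under root, sorted for stable ordering."""
    # rglob walks the directory tree recursively, like os.walk does.
    return sorted(str(path) for path in Path(root).rglob("*.md") if path.is_file())
```

Calling get_markdown_files() with no arguments looks in the "text" directory, just like the os.walk version.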

Step D: Split the Markdown files

def split_markdown_files(markdown_files):
    markdown_splitter = MarkdownHeaderTextSplitter(headers_to_split_on=headers_to_split_on)
    split_docs = []
    for file in markdown_files:
        # Read the markdown file
        with open(file, "r") as f:
            markdown_text = f.read()
        md_header_splits = markdown_splitter.split_text(markdown_text)
        split_docs.append(md_header_splits)
    return split_docs

We initialize an instance of MarkdownHeaderTextSplitter named markdown_splitter with our defined headers.

split_docs is an empty list to store the split versions of our documents.

We loop over each markdown file in the markdown_files list.

Within the loop, each markdown file is opened and its content is read into the markdown_text variable.

We then use markdown_splitter.split_text(markdown_text) to split the markdown content based on the specified headers.

The split content (md_header_splits) is appended to the split_docs list.

Finally, the function returns the split_docs list, containing the split versions of our original markdown documents.
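One detail worth noticing: because each file's chunks are appended as a whole, split_docs is a list of lists (one inner list per file). If you would rather work with a single flat list of chunks, a small helper like the one below does the job. The name flatten_chunks is hypothetical, and plain strings stand in for the chunk objects here.

```python
from itertools import chain


def flatten_chunks(split_docs):
    """Turn a list of per-file chunk lists into one flat list of chunks."""
    return list(chain.from_iterable(split_docs))


# Strings stand in for the chunk objects returned by the splitter.
nested = [["file1-chunk1", "file1-chunk2"], [], ["file2-chunk1"]]
flat = flatten_chunks(nested)
print(len(flat))
```

Files that produced no chunks simply contribute nothing to the flat list.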

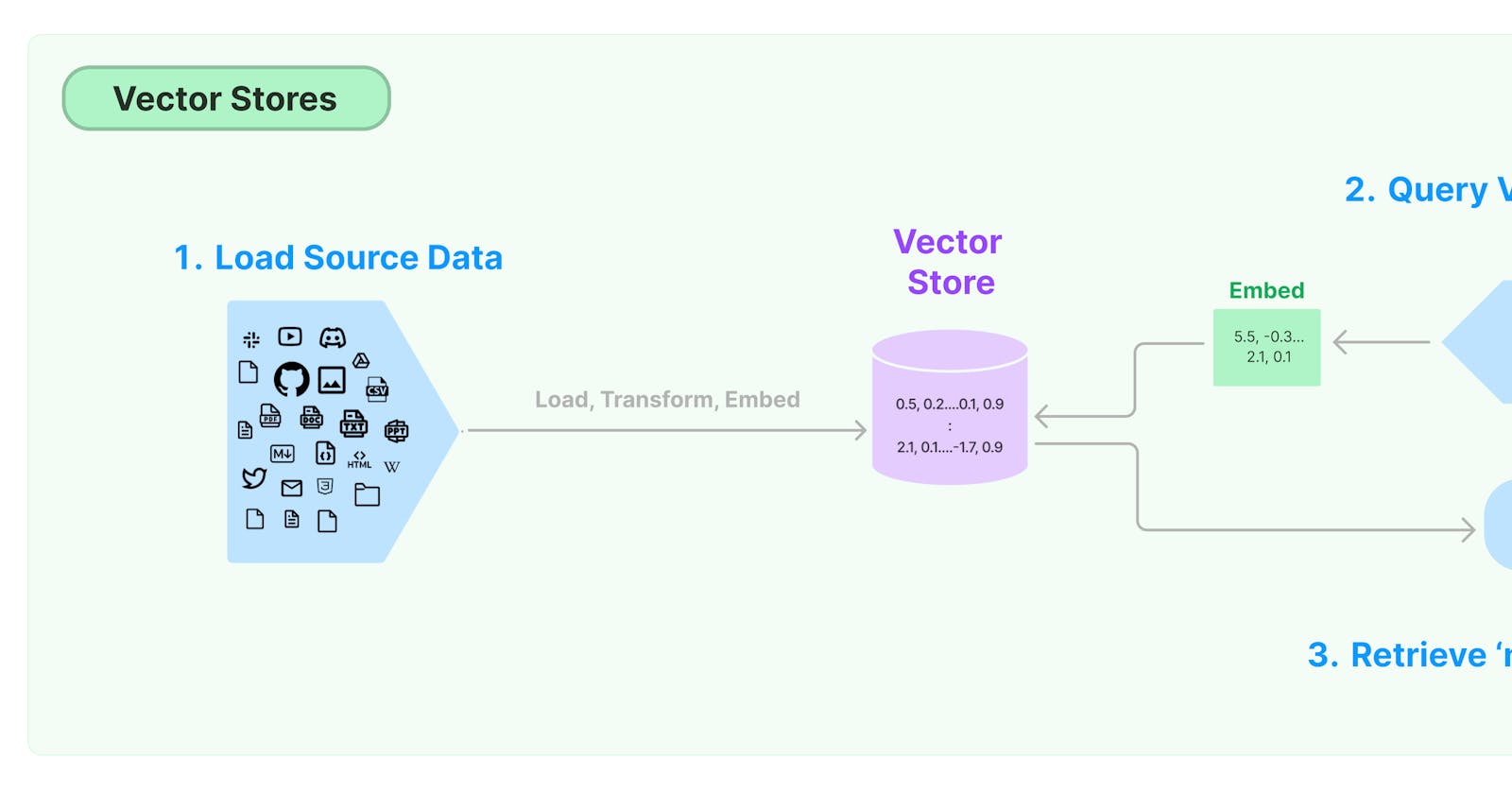

2b. Create embeddings to store in Pinecone

In this section, we will discuss how to create embeddings and store them in our Pinecone vector store.

Step A: Import necessary libraries

import importlib

import openai

import pinecone

import os

from dotenv import load_dotenv

from langchain.vectorstores import Pinecone

from langchain.embeddings.openai import OpenAIEmbeddings

importlib provides dynamic module import functionality.

openai and pinecone are the SDKs for OpenAI and Pinecone respectively.

os allows interaction with the operating system.

dotenv lets you load environment variables from a .env file.

The last two imports are classes from the langchain library for storing vectors in Pinecone and creating embeddings with OpenAI.
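Why importlib at all? The chunking script is named chunk-docs.py, and a hyphen is not allowed in a regular import statement, so the module has to be loaded by its name as a string. Here is a small self-contained sketch of that behavior; the file demo-module.py and its greet function are made up for the demonstration.

```python
import importlib
import sys
import tempfile
from pathlib import Path

with tempfile.TemporaryDirectory() as tmp:
    # Hypothetical module file whose name contains a hyphen.
    Path(tmp, "demo-module.py").write_text("def greet():\n    return 'hello'\n")
    sys.path.insert(0, tmp)
    try:
        # "import demo-module" would be a SyntaxError; a string name works.
        demo = importlib.import_module("demo-module")
        greeting = demo.greet()
    finally:
        # Clean up so the temporary module does not linger.
        sys.path.remove(tmp)
        sys.modules.pop("demo-module", None)

print(greeting)
```

Renaming the script to chunk_docs.py would allow a plain import instead, at the cost of changing the file name used in this series.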

Step B: Set API Keys from env variables

load_dotenv()

openai.api_key = os.environ["OPENAI_API_KEY"]

Loads environment variables from a .env file using load_dotenv().

Sets the OpenAI API key.
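Since os.environ["..."] raises a KeyError on the first missing variable, it can be handy to check everything up front and report all missing names at once. The helper below is a sketch of that idea; missing_env_vars and REQUIRED_VARS are names I made up, while the variable names themselves are the ones used in this post.

```python
import os

REQUIRED_VARS = [
    "OPENAI_API_KEY",
    "PINECONE_API_KEY",
    "PINECONE_ENV",
    "PINECONE_INDEX_NAME",
]


def missing_env_vars(required=REQUIRED_VARS, environ=None):
    """Return the names of required variables that are unset or empty."""
    environ = os.environ if environ is None else environ
    return [name for name in required if not environ.get(name)]


# Example with an explicit mapping instead of the real environment.
print(missing_env_vars(environ={"OPENAI_API_KEY": "placeholder"}))
```

In the real script you would call missing_env_vars() right after load_dotenv() and stop with a clear message if the returned list is not empty.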

Step C: Initialize and prepare Pinecone index

pinecone.init(
    api_key = os.environ["PINECONE_API_KEY"],
    environment = os.environ["PINECONE_ENV"]
)

index_name = os.environ["PINECONE_INDEX_NAME"]

if index_name not in pinecone.list_indexes():
    print("Index does not exist, creating it")
    pinecone.create_index(
        name=index_name,
        metric='cosine',
        dimension=1536
    )

# Initialize OpenAIEmbeddings
embeddings = OpenAIEmbeddings()

# import the chunk-docs.py file
chunk_docs = importlib.import_module('chunk-docs')

# Get the markdown_files and then the chunks
markdown_files = chunk_docs.get_markdown_files()
split_docs = chunk_docs.split_markdown_files(markdown_files)

This initializes Pinecone with the necessary API key and environment, both sourced from environment variables.

We get the index_name from the environment variables.

We then check if a Pinecone index with that name already exists.

If not, a new index is created using pinecone.create_index() with the given name, the cosine metric, and a dimension of 1536.

We initialize OpenAIEmbeddings.

We import chunk-docs.py and call its functions to get the chunked documents.
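Two of the index settings deserve a quick note. The dimension has to match the length of the vectors you store, and 1536 is the output size of OpenAI's text-embedding-ada-002 model, which I am assuming is the model OpenAIEmbeddings uses by default here. The cosine metric scores two vectors by the angle between them. Below is a plain-Python sketch of that formula, written for illustration only and not how Pinecone computes it internally.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_a * norm_b)


same_direction = cosine_similarity([1.0, 2.0, 3.0], [2.0, 4.0, 6.0])
orthogonal = cosine_similarity([1.0, 0.0], [0.0, 1.0])
print(same_direction, orthogonal)
```

Vectors pointing the same way score close to 1 regardless of their length, which is why cosine is a common choice for comparing text embeddings.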

Step D: Process and store in Pinecone Index

for doc in split_docs:
    vector_store = Pinecone.from_documents(doc, embeddings, index_name=index_name)

Each file's list of chunks is passed to Pinecone.from_documents() along with the OpenAI embeddings and the index name.

This method generates an embedding for each chunk using OpenAI and stores the resulting vectors in the specified Pinecone index.
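To picture what "embed, then store" means, here is a toy in-memory version of the flow. Everything in it is a stand-in: fake_embed is a hypothetical, deterministic function that carries no semantic meaning, a plain dict plays the role of the index, and the chunk layout is my own assumption. It makes no network calls and does not reflect how LangChain or Pinecone are implemented.

```python
import hashlib


def fake_embed(text, dimension=8):
    """Hypothetical stand-in for an embedding model: deterministic, not semantic."""
    digest = hashlib.sha256(text.encode("utf-8")).digest()
    return [byte / 255 for byte in digest[:dimension]]


def store_documents(docs, embed, index):
    """Embed each chunk and store its vector with its metadata under a new id."""
    start = len(index)
    for offset, doc in enumerate(docs):
        index[f"chunk-{start + offset}"] = {
            "values": embed(doc["content"]),
            # Keep the text next to the vector so a match can be shown later.
            "metadata": {**doc["metadata"], "text": doc["content"]},
        }
    return len(docs)


index = {}
docs = [
    {"content": "Install it", "metadata": {"Header 1": "Intro"}},
    {"content": "Run it", "metadata": {"Header 1": "Usage"}},
]
stored = store_documents(docs, fake_embed, index)
print(stored, sorted(index))
```

The real pipeline follows the same shape, with OpenAI producing the vectors and Pinecone holding them.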

Conclusion

To summarize, the code we've written:

Detects all markdown files within the "text" directory.

Splits each of those markdown files into chunks based on the specified headers (in this case, # and ##).

Returns a list of these split documents for further processing.

Converts those text chunks into embeddings using OpenAI.

Stores the embeddings in a Pinecone index for later retrieval and similarity search.

Next, we will cover how to put this all together and create a Streamlit app where we can chat with the embedded documents! If you're interested in the full code, check out my GitHub repo and give it a star!